The second line specifies the input format for this shader. Data in an OpenGL buffer can be whatever we want; that is, the language does not force you to pass a specific data structure with predefined semantics. From the shader's point of view, it simply expects to receive a buffer with data. It can be a position, a position with some additional information, or whatever we want. The vertex shader just receives an array of floats. When we fill the buffer, we define the chunks that are going to be processed by the shader, so first we need to turn each chunk into something that is meaningful to us. In this case we are saying that, starting from position 0, we expect to receive a vector composed of 3 attributes (x, y, z). The shader has a main block, like any C program, which in this case is very simple.
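Putting that description together, the vertex shader could look roughly like the sketch below. The GLSL text is embedded in a Java string only so that the example is self-contained. The class name `VertexShaderSource` is hypothetical, and the body of `main` is an assumption: the text only says it is very simple, so the sketch just passes the position through.

```java
// Hypothetical holder class; only the GLSL text inside the string matters.
final class VertexShaderSource {

    // GLSL source that would live in the vertex shader file.
    static final String SOURCE = String.join("\n",
        "#version 330",
        "",
        "// Input format: a vec3 (x, y, z) read from attribute location 0.",
        "layout (location = 0) in vec3 position;",
        "",
        "void main()",
        "{",
        "    // Assumed body: forward the position as a homogeneous coordinate.",
        "    gl_Position = vec4(position, 1.0);",
        "}",
        "");

    public static void main(String[] args) {
        // Print the shader text so it can be inspected or saved to a file.
        System.out.print(SOURCE);
    }
}
```

The `layout (location = 0)` qualifier is what ties the shader input to "position 0" of the data we put in the buffer, and `vec3` is what declares the 3 attributes per vertex.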

Keep in mind that 3D cards are designed to parallelize all the operations of the graphics pipeline. The input data can be processed in parallel in order to generate the final scene. So let's start writing our first shader program. Shaders are written in GLSL (OpenGL Shading Language), which is based on ANSI C. First we will create a file named "vertex.vs" (the extension stands for Vertex Shader) under the resources directory with the following content:

#version 330

The first line is a directive that states the version of the GLSL language we are using. The following table relates the GLSL version, the OpenGL version that matches it and the directive to use (source: Wikipedia).
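As a quick reference, the correspondence between OpenGL versions and GLSL version directives can be captured as a small lookup. This is a minimal sketch: the class and method names (`GlslVersions`, `directiveFor`) are hypothetical, and the values are the commonly documented pairings rather than anything taken from this chapter.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: maps an OpenGL version to its matching GLSL directive.
final class GlslVersions {

    static final Map<String, String> DIRECTIVE_BY_OPENGL = new LinkedHashMap<>();

    static {
        // Before OpenGL 3.3 the GLSL numbering differed from the OpenGL one.
        DIRECTIVE_BY_OPENGL.put("2.0", "#version 110");
        DIRECTIVE_BY_OPENGL.put("2.1", "#version 120");
        DIRECTIVE_BY_OPENGL.put("3.0", "#version 130");
        DIRECTIVE_BY_OPENGL.put("3.1", "#version 140");
        DIRECTIVE_BY_OPENGL.put("3.2", "#version 150");
        // From OpenGL 3.3 onwards both numbers are aligned.
        DIRECTIVE_BY_OPENGL.put("3.3", "#version 330");
        DIRECTIVE_BY_OPENGL.put("4.0", "#version 400");
        DIRECTIVE_BY_OPENGL.put("4.1", "#version 410");
        DIRECTIVE_BY_OPENGL.put("4.2", "#version 420");
        DIRECTIVE_BY_OPENGL.put("4.3", "#version 430");
        DIRECTIVE_BY_OPENGL.put("4.4", "#version 440");
        DIRECTIVE_BY_OPENGL.put("4.5", "#version 450");
        DIRECTIVE_BY_OPENGL.put("4.6", "#version 460");
    }

    // Returns the directive for the given OpenGL version, or null if unknown.
    static String directiveFor(String openGlVersion) {
        return DIRECTIVE_BY_OPENGL.get(openGlVersion);
    }

    public static void main(String[] args) {
        for (Map.Entry<String, String> e : DIRECTIVE_BY_OPENGL.entrySet()) {
            System.out.println("OpenGL " + e.getKey() + " -> " + e.getValue());
        }
    }
}
```

The directive used in this chapter, `#version 330`, corresponds to OpenGL 3.3.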

The framebuffer is the final result of the graphics pipeline. It holds the value of each pixel that should be drawn to the screen.
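To make the idea of a framebuffer holding one value per pixel more concrete, here is a CPU-side sketch. It is not how OpenGL works internally (the GPU does this in hardware and in parallel), and every name in it (`SoftwareFramebuffer`, `fillTriangle`, `edge`) is hypothetical. It stores one packed RGB value per pixel and colors every pixel whose centre lies inside a triangle, which loosely mimics rasterization followed by a constant-color fragment stage.

```java
// Hypothetical CPU-side framebuffer: one packed 0xRRGGBB value per pixel.
final class SoftwareFramebuffer {
    final int width;
    final int height;
    final int[] pixels; // row-major: index = y * width + x

    SoftwareFramebuffer(int width, int height) {
        this.width = width;
        this.height = height;
        this.pixels = new int[width * height]; // starts out black (0)
    }

    void setPixel(int x, int y, int rgb) {
        pixels[y * width + x] = rgb;
    }

    int getPixel(int x, int y) {
        return pixels[y * width + x];
    }

    // Edge function: its sign tells on which side of the edge a->b the point p lies.
    static float edge(float ax, float ay, float bx, float by, float px, float py) {
        return (bx - ax) * (py - ay) - (by - ay) * (px - ax);
    }

    // Colors every pixel whose centre is inside the triangle; returns how many were covered.
    // Simplification: pixels exactly on an edge count as inside (no top-left fill rule).
    int fillTriangle(float x0, float y0, float x1, float y1, float x2, float y2, int rgb) {
        int covered = 0;
        // Looping only over the framebuffer area acts as a crude form of clipping.
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                float px = x + 0.5f; // sample at the pixel centre
                float py = y + 0.5f;
                float w0 = edge(x1, y1, x2, y2, px, py);
                float w1 = edge(x2, y2, x0, y0, px, py);
                float w2 = edge(x0, y0, x1, y1, px, py);
                // Accept either winding order: all signs must agree.
                boolean inside = (w0 >= 0 && w1 >= 0 && w2 >= 0)
                              || (w0 <= 0 && w1 <= 0 && w2 <= 0);
                if (inside) {
                    setPixel(x, y, rgb); // the "fragment" gets its final color
                    covered++;
                }
            }
        }
        return covered;
    }
}
```

For example, filling the triangle (0,0), (4,0), (0,4) in a 4x4 framebuffer colors the pixels on and below the diagonal and leaves the opposite corner untouched.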

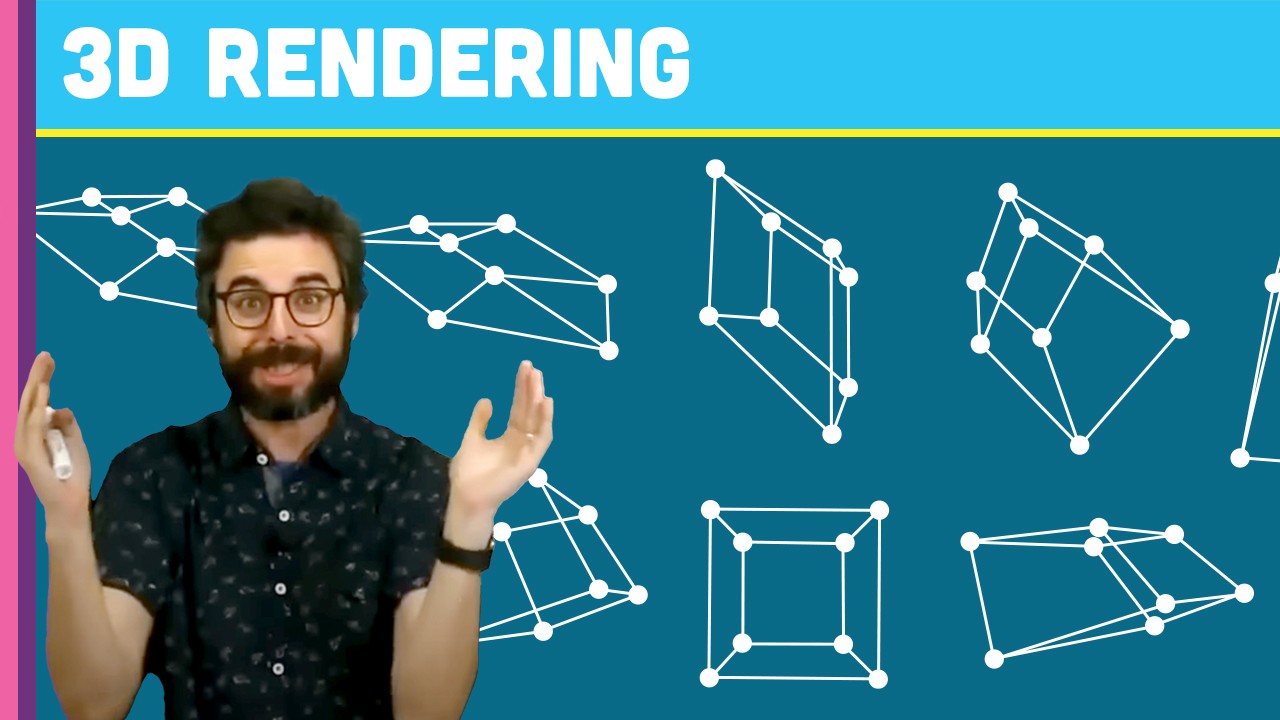

The sequence of steps that ends up drawing a 3D representation onto your 2D screen is called the graphics pipeline. The first versions of OpenGL employed a model called the fixed-function pipeline. This model employed a set of steps in the rendering process which defined a fixed set of operations. The programmer was constrained to the set of functions available for each step. Thus, the effects and operations that could be applied were limited by the API itself (for instance, "set fog" or "add light"; the implementation of those functions was fixed and could not be changed). The graphics pipeline was composed of these steps:

OpenGL 2.0 introduced the concept of the programmable pipeline. In this model, the different steps that compose the graphics pipeline can be controlled or programmed by using a set of specific programs called shaders. The accompanying picture depicts a simplified version of the OpenGL programmable pipeline.

The rendering starts by taking as its input a list of vertices in the form of Vertex Buffers. But what is a vertex? A vertex is a data structure that describes a point in 2D or 3D space. And how do you describe a point in 3D space? By specifying its x, y and z coordinates. And what is a Vertex Buffer? A Vertex Buffer is another data structure that packs all the vertices that need to be rendered, by using vertex arrays, and makes that information available to the shaders in the graphics pipeline.

Those vertices are processed by the vertex shader, whose main purpose is to calculate the projected position of each vertex in screen space. This shader can also generate other outputs related to color or texture, but its main goal is to project the vertices into screen space, that is, to generate dots.

The geometry processing stage connects the vertices transformed by the vertex shader to form triangles. It does so by taking into consideration the order in which the vertices were stored and grouping them using different models. Why triangles? A triangle is the basic work unit for graphics cards. It is a simple geometric shape that can be combined and transformed to construct complex 3D scenes. This stage can also use a specific shader to group the vertices.

The rasterization stage takes the triangles generated in the previous stages, clips them and transforms them into pixel-sized fragments. Those fragments are used during the fragment processing stage by the fragment shader to generate pixels, assigning them the final color that gets written into the framebuffer.
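The notions of a vertex (three floats for x, y and z) and of a Vertex Buffer (all vertices packed together) can be sketched in plain Java, before any OpenGL call is involved. This is only an illustration: the class name `VertexBufferSketch`, the `pack` helper and the triangle coordinates are assumptions, and a real program would still need to upload the buffer to the GPU through its OpenGL binding.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.FloatBuffer;

// Hypothetical sketch: packs vertex positions into a contiguous float buffer.
final class VertexBufferSketch {

    // Each vertex is described by three floats: x, y and z.
    static final int FLOATS_PER_VERTEX = 3;

    // Illustrative data: the three vertices of a single triangle.
    static final float[] TRIANGLE = {
         0.0f,  0.5f, 0.0f,
        -0.5f, -0.5f, 0.0f,
         0.5f, -0.5f, 0.0f
    };

    // Copies the vertices into a direct buffer in native byte order,
    // the kind of memory layout that native graphics APIs expect.
    static FloatBuffer pack(float[] vertices) {
        if (vertices.length % FLOATS_PER_VERTEX != 0) {
            throw new IllegalArgumentException("expected 3 floats per vertex");
        }
        FloatBuffer buffer = ByteBuffer
            .allocateDirect(vertices.length * Float.BYTES)
            .order(ByteOrder.nativeOrder())
            .asFloatBuffer();
        buffer.put(vertices);
        buffer.flip(); // switch from writing to reading
        return buffer;
    }

    public static void main(String[] args) {
        FloatBuffer buffer = pack(TRIANGLE);
        System.out.println("vertices: " + buffer.remaining() / FLOATS_PER_VERTEX);
    }
}
```

The order of the vertices in the array matters, since the geometry processing stage uses that order when grouping vertices into triangles.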


Let me give some advice to those of you who think that way: it is actually simpler and much more flexible. Modern OpenGL lets you think about one problem at a time, and it lets you organize your code and processes in a more logical way.

In this chapter we will learn about the processes that take place while rendering a scene using OpenGL. If you are used to older versions of OpenGL, that is, the fixed-function pipeline, you may end this chapter wondering why it needs to be so complex. You may end up thinking that drawing a simple shape to the screen should not require so many concepts and lines of code.
